Johann Lee

Recent Interests

- Training small LLMs to reason using synthetic datasets (PI: Prof. Kilian Weinberger)

- Speeding up cloud LLM inference with intra-GPU memory offloading (PI: Prof. Rachee Singh)

- $.99 bias in data-labeling pricing (advised by Dr. Bart De Koning)

- Come talk to me about my startup!

Publications

- ICML 2025 (International Conference on Machine Learning); ICLR 2025 Workshop

ICML

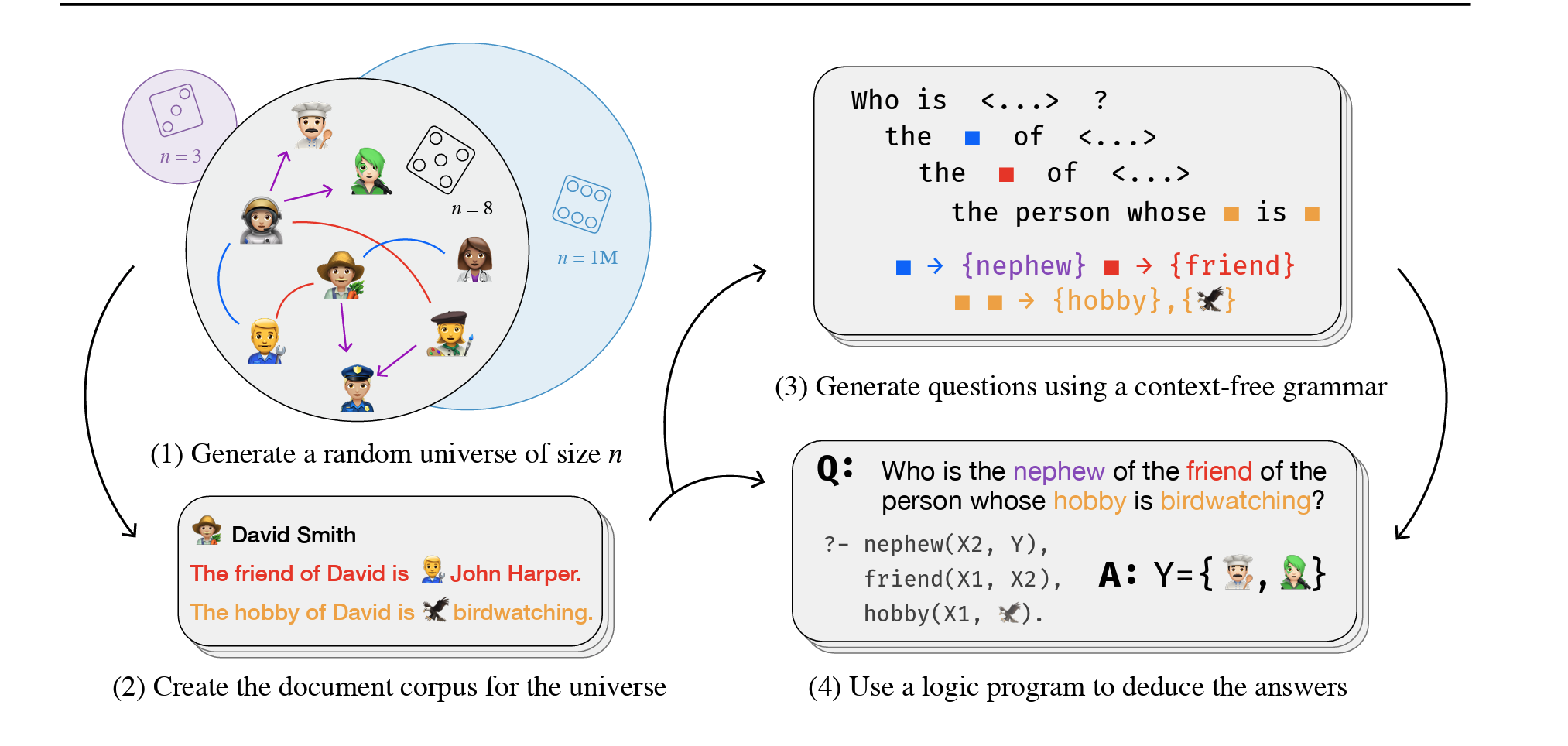

We address the problem of evaluation datasets being scraped into pretraining data by introducing a pipeline for generating synthetic datasets for reasoning and retrieval evaluation. We demonstrate the pipeline's resilience to scraping and use it to identify improvements in RAG and agentic methods across subtask composition, multi-branch reasoning, and needle-in-a-haystack retrieval.

- Stanford GRACE Journal, Vol. 3 No. 1 (2025): Generative AI and Global Futures (top 0.5% of 3000+ submissions)

Because initiatives for measuring safe, ethical AI are scattered and fragmented, this review outlines state-of-the-art methods, how to use them properly, and their systemic weaknesses and scaling issues. In doing so, we aim to foster ongoing discussion and innovation among both technical developers and non-technical policymakers.